Maximizing Your Development Workflow with Docker: An Introduction to Containerization

Before Docker

Before looking at Docker itself, it helps to understand how applications were deployed without it.

Before the advent of Docker, deploying and managing applications was a complex and time-consuming process. There were several challenges that developers faced, including:

Applications could behave differently in different environments, making it difficult to develop and test applications.

Managing the dependencies of an application was a difficult task, as the dependencies could vary from one environment to another.

Allocating the necessary resources for an application was a complex task, as the requirements of an application could change over time.

Scaling applications was challenging, as it involved provisioning and configuring new servers, which could take a significant amount of time.

Developers tried to address these challenges with manual configuration and ad hoc scripts, which were time-consuming and prone to errors.

Virtual machines emerged as one answer to these problems. So, let's see what a virtual machine is.

Virtual Machines

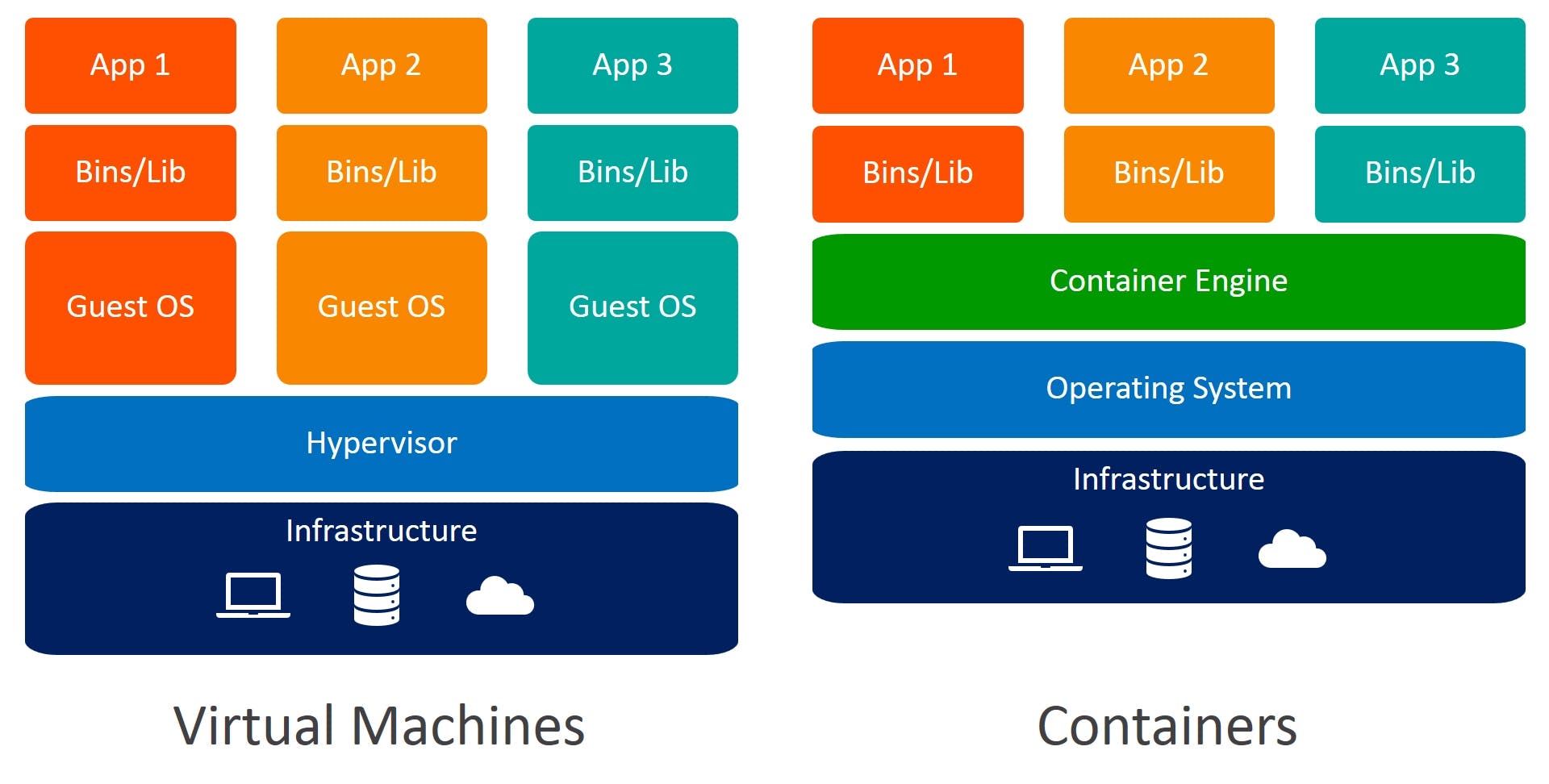

A virtual machine (VM) is a software-based emulation of a computer system that can run its own operating system and applications, just like a physical machine. A virtual machine runs on top of a hypervisor, which runs either directly on the hardware or on a host operating system and provides the necessary resources, such as CPU, memory, and storage, to the virtual machine.

Virtual machines create isolated environments for running applications and services, and they allow multiple virtual machines to run on a single physical machine. Each virtual machine has its own operating system and applications, and they are isolated from each other, so one virtual machine cannot affect the operation of another.

Overall, virtual machines provide a powerful and flexible way to run applications and services. They still have one drawback: each virtual machine requires a full operating system to be installed, which can consume a significant amount of system resources, including CPU, memory, and storage.

Containerization emerged to deal with this overhead.

Containers

The concept of containers is simple. Let's say you are moving to a new house. Instead of sending your household items one by one, you pack them all into a box and ship that box to the new house. This box is what we call a container.

In simple terms, containerization means packing an application together with its dependencies into a single unit, the container, which runs in a self-contained and isolated environment. That unit can be moved easily between different hosts and environments, which provides consistency and reliability.

A container consists of an application, its dependencies, and the necessary system libraries, tools, and runtime environment. This allows you to run the same application with the same behavior on any host that provides a compatible kernel and container runtime, regardless of what other software or configuration that host has.

Containers are different from virtual machines because they do not need a full operating system of their own. Instead, they share the host operating system's kernel and use the host's resources directly, making them lighter and more efficient than virtual machines.
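One way to see the shared kernel in practice is to compare the kernel release reported on the host with the one reported inside a container. The sketch below assumes a Linux host with Docker installed; the public alpine image and the function name compare_kernels are choices made for this illustration only. On Docker Desktop for macOS or Windows, the container reports the kernel of Docker's Linux virtual machine rather than that of the host.

```shell
# Image used for the demo (an assumption; any small Linux image works).
IMAGE="alpine"

# Print the kernel release seen by the host and by a container.
compare_kernels() {
  echo "host kernel:      $(uname -r)"
  # --rm removes the container as soon as the command exits.
  echo "container kernel: $(docker run --rm "$IMAGE" uname -r)"
}

# Run the comparison only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  compare_kernels
else
  echo "Docker daemon not reachable; skipping the comparison"
fi
```

On a Linux host both lines normally show the same release, because the container does not boot a kernel of its own.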

Now that you've learned about virtual machines and containers, let's compare them side by side before moving on to Docker.

Containers vs Virtual Machines

Containers and virtual machines are both technologies that allow you to run applications in isolated environments, but they differ in several key ways:

Resource utilization: Virtual machines require a full operating system to be installed, which can consume a significant amount of system resources, including CPU, memory, and storage. Containers, on the other hand, share the host operating system's kernel and have a smaller footprint, making them more efficient in terms of resource utilization.

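Portability: Containers are designed to be portable and can run on any host that has a compatible container runtime, whether that is a laptop, an on-premises server, or a cloud instance. Linux containers still need a Linux kernel, which is why Docker Desktop on macOS and Windows runs them inside a lightweight virtual machine. Virtual machine images, on the other hand, are larger and often tied to a particular hypervisor format, so they are harder to move between platforms.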

Deployment and scaling: Containers can be easily deployed and scaled, as they typically start and stop in seconds and can be moved between hosts. Virtual machines, on the other hand, require more time and effort to deploy and scale, as each one has to boot and configure a full operating system.

Isolation: Both virtual machines and containers provide isolation, but at different levels. Containers isolate processes while sharing the host kernel, which allows many containers to run on a single host at a fine level of granularity. Virtual machines each run their own operating system on virtualized hardware, which gives a stronger isolation boundary.

In summary, virtual machines and containers both have their strengths and weaknesses, and the best solution depends on the specific needs of each project. If you require a high degree of isolation, need to run applications that require a specific operating system, or require a significant amount of resources, virtual machines may be a better choice. If you need to deploy and scale applications quickly, need to optimize resource utilization, or require a high degree of portability, containers may be the better choice. In practice, many organizations use both, with containers running on top of virtual machines.

Docker

What is Docker?

Docker has revolutionized the way developers work by providing a simple and efficient way to containerize applications.

Docker is an open-source platform that automates the deployment, scaling, and management of applications inside containers. It provides a container runtime, a registry to store images, and tools to manage containers. With Docker, you can build, package, and distribute applications as containers, which can run anywhere the Docker runtime is installed.

Benefits of Docker

Portability:

Docker containers package an application together with its dependencies and are isolated from each other and from the host system, which makes it easy to move them between different platforms and environments.

Consistency:

Docker containers ensure that applications run the same way on every platform, making it easier to develop and test applications.

Scalability:

Docker containers can be easily scaled up or down based on the application's needs, making it easier to manage the resources needed.

Security:

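Docker containers are isolated from each other, which limits the impact of a compromised or misbehaving application compared to running everything directly on the same host. Because containers share the host kernel, this isolation is weaker than that of a virtual machine.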

Cost Savings:

Docker reduces the costs associated with deploying and maintaining applications by letting more workloads share the same hardware and by reducing the time required for deployment and maintenance.

Components of Docker

Docker Daemon:

The Docker daemon is the core component of Docker, and it is responsible for building, running, and managing containers. The Docker daemon communicates with the Docker client and other components to provide the Docker services.

Docker Client:

The Docker client is the command-line interface (the docker command) that allows you to interact with the Docker daemon. You use the Docker client to build, run, and manage containers; it sends each request to the daemon, which does the actual work, including downloading images from and uploading images to the Docker registry.

Docker Images:

Docker images are the building blocks of Docker containers. They are pre-configured packages that include the application code, its dependencies, and the necessary system tools and libraries. Docker images are stored in a Docker registry, and you can use them to create and run Docker containers.

Docker Containers:

Docker containers are instances of Docker images that run as isolated and self-contained environments. Each container has its own file system, network, and environment variables, and you can run multiple containers on the same host.

Docker Registry:

The Docker registry is a centralized repository where you can store and manage Docker images. You can use the Docker registry to download and upload images, and to share images with other users.

Docker Compose:

Docker Compose is a tool for defining and running multi-container applications. With Docker Compose, you can define the services that make up your application and then use a single command to start and stop all of the services.

Docker Swarm:

Docker Swarm is a tool for managing and orchestrating Docker containers at scale. With Docker Swarm, you can create and manage a swarm of Docker nodes, and deploy and manage services across the swarm.
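To make the Docker Compose entry above more concrete, here is a minimal sketch of a two-service application. The service names (web, cache), the images, the project name compose-demo, and the host port 8080 are all assumptions chosen for illustration, and the script assumes the docker compose plugin is installed. It writes the Compose file to a scratch directory so it does not touch your project.

```shell
# Write a minimal Compose file into a scratch directory.
# Service names and images below are illustrative assumptions.
DEMO_DIR="$(mktemp -d)"
cat > "$DEMO_DIR/compose.yaml" <<'EOF'
services:
  web:
    image: nginx:alpine
    ports:
      - "8080:80"   # host port 8080 -> container port 80
  cache:
    image: redis:alpine
EOF

# Start both services with one command, list them, then tear them down.
compose_demo() {
  docker compose -p compose-demo -f "$DEMO_DIR/compose.yaml" up -d
  docker compose -p compose-demo -f "$DEMO_DIR/compose.yaml" ps
  docker compose -p compose-demo -f "$DEMO_DIR/compose.yaml" down
}

# Run the demo only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  compose_demo
else
  echo "Docker daemon not reachable; wrote $DEMO_DIR/compose.yaml only"
fi
```

The point of the sketch is that up and down act on every service in the file at once, so you do not have to start and stop each container by hand.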

Main Docker Commands

docker run: This command is used to run a new container from a Docker image.

docker ps: This command is used to list the running containers on the host.

docker images: This command lists the available Docker images on the host.

docker pull: This command is used to download a Docker image from a Docker registry to the local host.

docker push: This command is used to upload a Docker image to a Docker registry.

docker build: This command is used to build a Docker image from a Dockerfile.

docker stop: This command is used to stop a running Docker container.

docker start: This command is used to start a stopped Docker container.

docker rm: This command is used to remove a Docker container.

docker rmi: This command is used to remove a Docker image.
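The commands above fit together into a typical container lifecycle, which can be sketched as follows. The image nginx:alpine, the container name web-demo, and the host port 8080 are assumptions for this example. docker build and docker push are left out here because they need a Dockerfile and a registry account.

```shell
IMAGE="nginx:alpine"   # public image used as an example
NAME="web-demo"        # hypothetical container name

container_lifecycle() {
  docker pull "$IMAGE"                               # download the image
  docker images                                      # list local images
  docker run -d --name "$NAME" -p 8080:80 "$IMAGE"   # start a container in the background
  docker ps                                          # list running containers
  docker stop "$NAME"                                # stop the container
  docker start "$NAME"                               # start the same container again
  docker stop "$NAME"                                # stop it once more before removal
  docker rm "$NAME"                                  # remove the stopped container
  docker rmi "$IMAGE"                                # remove the image
}

# Run the walk-through only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  container_lifecycle
else
  echo "Docker daemon not reachable; skipping the walk-through"
fi
```

Note that docker rm only works on a stopped container, and docker rmi only succeeds once no container still uses the image, which is why the order of the last three commands matters.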

Docker Runtime

Docker runtime refers to the environment in which Docker containers run. It includes the host operating system, the Docker daemon, and the containers themselves. The Docker runtime is responsible for managing the resources (e.g., CPU, memory, and storage) and the network connections for the containers.

In other words, the Docker runtime provides the necessary infrastructure for containers to run and interact with each other and with the host system. The Docker runtime also ensures that each container is isolated from the others and from the host system, so that each container has its own environment and resources.
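As a small illustration of the runtime managing resources, docker run accepts per-container limits. The sketch below caps a container at 256 MiB of memory and half a CPU core and then asks the daemon what it recorded. The container name limited-demo, the alpine image, and the specific limits are assumptions made for the example.

```shell
NAME="limited-demo"    # hypothetical container name
MEMORY_LIMIT="256m"    # cap memory at 256 MiB
CPU_LIMIT="0.5"        # cap CPU time at half of one core

limits_demo() {
  # Start a container that just sleeps, with the limits applied.
  docker run -d --name "$NAME" --memory "$MEMORY_LIMIT" --cpus "$CPU_LIMIT" alpine sleep 60
  # Show the recorded limits: memory in bytes, CPU in billionths of a core.
  docker inspect --format '{{.HostConfig.Memory}} {{.HostConfig.NanoCpus}}' "$NAME"
  # Force-remove the container when done.
  docker rm -f "$NAME"
}

# Run the demo only when a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  limits_demo
else
  echo "Docker daemon not reachable; skipping the limits demo"
fi
```

Without such flags a container can use as much of the host's CPU and memory as the host allows, so limits are worth setting when several containers share one machine.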

Docker Engine

Docker Engine is the core component of the Docker platform. It is a client-server application that runs on the host operating system and is responsible for building, running, and managing Docker containers. The Docker Engine exposes a high-level API for interacting with containers, and the Docker daemon serves that API and performs the requested operations on the containers.

The Docker Engine consists of several components, including the Docker daemon, the Docker client, the Docker API, and the Docker image format. The Docker daemon is responsible for building and running containers, while the Docker client provides a command-line interface for interacting with the Docker daemon. The Docker API allows third-party applications to interact with the Docker Engine, and the Docker image format is used to store and distribute Docker images.
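The split between client, daemon, and API can be observed directly. In the sketch below, docker version prints separate sections for the client and the server (the daemon), and the curl call asks the Engine API for the same information without going through the Docker client. The socket path is the usual default on Linux and is an assumption about your setup; curl must be installed, and your user needs permission to access the socket.

```shell
SOCKET="/var/run/docker.sock"   # usual default on Linux (an assumption about your setup)

engine_demo() {
  # The CLI prints a Client section and a Server (daemon) section.
  docker version
  # The same information straight from the Engine API, without the CLI.
  curl --silent --unix-socket "$SOCKET" http://localhost/version
  echo
}

# Run the demo only when the socket exists and the daemon answers.
if [ -S "$SOCKET" ] && docker info >/dev/null 2>&1; then
  engine_demo
else
  echo "Docker daemon not reachable at $SOCKET; skipping"
fi
```

This is the same route third-party tools take: they talk to the daemon through the API rather than through the docker command.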

Dockerfile

A Dockerfile is a script that contains instructions for building a Docker image. It is a simple text file that contains a series of commands that are executed in sequence to create the image. The Dockerfile is used by the Docker Engine to build the image, and it provides a way for developers and administrators to define the environment, dependencies, and configuration for their applications.

A typical Dockerfile starts with a base image, such as an operating system image, and then adds additional components, such as software libraries and application code. The Dockerfile can also include environment variables, configuration files, and scripts that are used to start the application.

Example:

# Use an existing image as the base image

FROM ubuntu:20.04

# Update the package list and install required packages

RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y apache2 && rm -rf /var/lib/apt/lists/*

# Copy the contents of the local directory to the image

COPY . /var/www/html/

# Define the environment variables

ENV APACHE_RUN_USER=www-data

ENV APACHE_RUN_GROUP=www-data

ENV APACHE_LOG_DIR=/var/log/apache2

# Expose the default Apache port

EXPOSE 80

# Start Apache when the container is run

CMD ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]

This Dockerfile builds an image for an Apache web server, starting from an Ubuntu 20.04 image. It installs the Apache2 package, copies the current directory contents to the image, sets environment variables, and starts Apache when the container is run.
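To try the Dockerfile above, you would build an image from it and start a container from that image. The sketch below assumes the Dockerfile is saved as Dockerfile in the current directory next to the files you want to serve. The tag my-apache, the container name my-apache-demo, and the host port 8080 are hypothetical names chosen for the example.

```shell
TAG="my-apache"         # hypothetical image tag
NAME="my-apache-demo"   # hypothetical container name

build_and_run() {
  # Build an image from the Dockerfile in the current directory.
  docker build -t "$TAG" .
  # Start a container in the background, mapping host port 8080 to port 80.
  docker run -d --name "$NAME" -p 8080:80 "$TAG"
  # Confirm that the container is running.
  docker ps --filter "name=$NAME"
}

# Run only when a Dockerfile is present and a Docker daemon is reachable.
if [ -f Dockerfile ] && docker info >/dev/null 2>&1; then
  build_and_run
else
  echo "Need a Dockerfile in the current directory and a running Docker daemon"
fi
```

Once the container is up, the site should be reachable at http://localhost:8080, and docker rm -f my-apache-demo removes the container when you are finished.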

Conclusion

Docker provides a simple and efficient way to containerize applications, making them easier to deploy, scale, and manage. Whether you're a beginner or an experienced developer, Docker is a great tool to have in your toolkit. So, start exploring and see how Docker can help you maximize your development workflow!